GC Enterprise Architecture/Framework/DataGuide

Information architecture

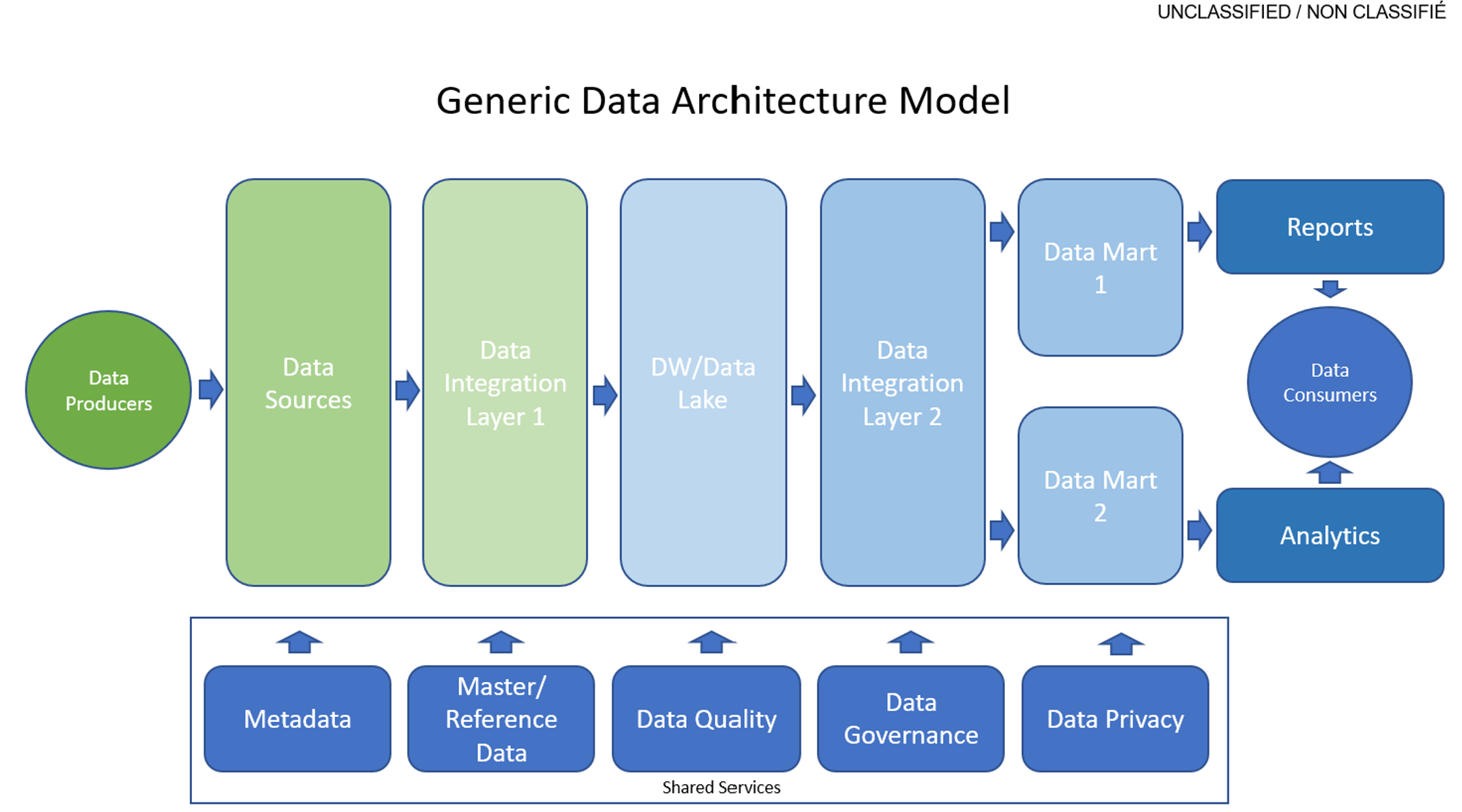

Information architecture is the management and organization of data for a business. Its best practices and principles aim to support the needs of a business-service and business-capability orientation. To facilitate the effective sharing of data and information across government, information architectures should be designed to reflect a consistent approach to both structured and unstructured data, such as the adoption of federal and international standards. Information architecture should also reflect responsible data management, information management, and governance practices, including the source, quality, interoperability, and associated legal and policy obligations of the data assets. Information architectures should also distinguish between personal and non‑personal data. How personal information is treated, including its collection, use, sharing (disclosure), and management, must respect the requirements of the Privacy Act and its related policies. The model of data architecture shown in the figure above illustrates the core components that data flows through in an enterprise to produce insights and analytics; each component is described below.

Data Producer: A service or device that collects, processes, and stores data for a business. Data producers also monitor the data they obtain to ensure the quality of the data asset.

Data Source: A data source is made up of fields and groups. In the same way that folders on your hard disk contain and organize your files, fields contain the data that users enter into forms that are based on your form template, and groups contain and organize those fields.

Data Integration: Data integration is the process for combining data from several disparate sources to provide users with a single, unified view.

Integration is the act of bringing together smaller components into a single system so that they function as one. In an IT context, it means stitching together different data subsystems into a more extensive, comprehensive, and standardized system shared by multiple teams, which helps build unified insights for everyone.
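As a minimal sketch of combining disparate sources into a single, unified view, the Python below merges records from two source systems on a shared key. It assumes both sources share a `client_id` field; the source systems, field names, and the rule that an earlier source's non-empty value wins are hypothetical choices made for illustration, not a prescribed GC method.

```python
from typing import Any

Record = dict[str, Any]


def integrate(*sources: list[Record], key: str = "client_id") -> dict[Any, Record]:
    """Combine records from several sources into one unified record per key.

    Sources are processed in order; a field already holding a non-None value
    is not overwritten, so earlier sources take precedence over later ones.
    """
    unified: dict[Any, Record] = {}
    for source in sources:
        for record in source:
            merged = unified.setdefault(record[key], {key: record[key]})
            for field, value in record.items():
                if merged.get(field) is None and value is not None:
                    merged[field] = value
    return unified


# Hypothetical extracts from two disparate systems.
benefits_system = [{"client_id": 1, "name": "A. Tremblay", "program": "EI"}]
contact_system = [
    {"client_id": 1, "email": "a.tremblay@example.ca", "name": None},
    {"client_id": 2, "name": "B. Singh", "email": "b.singh@example.ca"},
]

view = integrate(benefits_system, contact_system)
print(view[1])
```

A precedence rule like this one is only one option; real integrations usually need explicit, documented rules for resolving conflicting values between sources.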

Data Lake: A data lake is a centralized repository that ingests and stores large volumes of data in its original form. The data can then be processed and used as a basis for a variety of analytic needs. Due to its open, scalable architecture, a data lake can accommodate all types of data from any source, from structured (database tables, Excel sheets) to semi-structured (XML files, webpages) to unstructured (images, audio files, tweets), all without sacrificing fidelity. The data files are typically stored in staged zones—raw, cleansed, and curated—so that different types of users may use the data in its various forms to meet their needs. Data lakes provide core data consistency across a variety of applications, powering big data analytics, machine learning, predictive analytics, and other forms of intelligent action.
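The staged zones can be illustrated with a small in-memory sketch. It assumes hypothetical `region` and `amount` fields and illustrative cleansing rules; a real data lake would hold files in storage services rather than Python lists. The raw zone keeps records exactly as received, the cleansed zone standardizes and filters them, and the curated zone holds an analysis-ready aggregate.

```python
from dataclasses import dataclass, field


@dataclass
class DataLake:
    """Toy data lake with three staged zones: raw, cleansed, and curated."""
    raw: list[dict] = field(default_factory=list)
    cleansed: list[dict] = field(default_factory=list)
    curated: dict[str, float] = field(default_factory=dict)

    def ingest(self, record: dict) -> None:
        # Raw zone: store a copy of the record exactly as received.
        self.raw.append(dict(record))

    def cleanse(self) -> None:
        # Cleansed zone: standardize values and drop records missing required fields.
        self.cleansed = [
            {"region": r["region"].strip().upper(), "amount": float(r["amount"])}
            for r in self.raw
            if r.get("region") and r.get("amount") not in (None, "")
        ]

    def curate(self) -> None:
        # Curated zone: total amount per region, ready for analytics.
        totals: dict[str, float] = {}
        for r in self.cleansed:
            totals[r["region"]] = totals.get(r["region"], 0.0) + r["amount"]
        self.curated = totals


lake = DataLake()
for rec in [{"region": " on ", "amount": "100"},
            {"region": "QC", "amount": 50},
            {"region": "", "amount": "10"}]:
    lake.ingest(rec)
lake.cleanse()
lake.curate()
print(lake.curated)  # {'ON': 100.0, 'QC': 50.0}
```

Because the raw zone is never modified, the cleansing rules can be changed later and the cleansed and curated zones rebuilt without losing fidelity.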

Data Mart: A data mart is best understood in relation to a data warehouse. A data warehouse is a system that aggregates data from multiple sources into a single, central, consistent data store to support data mining, artificial intelligence (AI), and machine learning, which ultimately can enhance sophisticated analytics and business intelligence. Through this strategic collection process, data warehouse solutions consolidate data from different sources and make it available in one unified form.

A data mart is a focused version of a data warehouse that contains a smaller subset of data important to and needed by a single team or a select group of users within an organization. A data mart is built from an existing data warehouse (or other data sources) through a complex procedure that involves multiple technologies and tools to design and construct a physical database, populate it with data, and set up intricate access and management protocols.
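The core idea of a data mart, a focused subset of warehouse data for one team, can be sketched as selecting only the rows and columns that team needs. This is a simplification under stated assumptions: the warehouse rows, field names, and the EI analytics team are hypothetical, and a real data mart is a separate physical database with its own access protocols rather than an in-memory list.

```python
from typing import Any, Callable, Iterable

Row = dict[str, Any]


def build_data_mart(
    warehouse: Iterable[Row],
    columns: list[str],
    predicate: Callable[[Row], bool] = lambda row: True,
) -> list[Row]:
    """Select the rows and columns one team needs from a warehouse extract."""
    return [{col: row[col] for col in columns} for row in warehouse if predicate(row)]


# Hypothetical warehouse rows.
warehouse = [
    {"case_id": 1, "program": "EI", "region": "ON", "status": "open", "client_name": "A. Tremblay"},
    {"case_id": 2, "program": "CPP", "region": "QC", "status": "closed", "client_name": "B. Singh"},
    {"case_id": 3, "program": "EI", "region": "BC", "status": "closed", "client_name": "C. Wong"},
]

# Hypothetical mart for an EI analytics team: EI cases only, no client names.
ei_mart = build_data_mart(
    warehouse,
    columns=["case_id", "region", "status"],
    predicate=lambda row: row["program"] == "EI",
)
print(ei_mart)
```

Leaving `client_name` out of the mart also shows how a narrower scope can reduce the personal information each team is exposed to.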

Data Consumers: Data consumers are services or applications, such as Power BI or Dynamics 365 Customer Insights, that read data in Common Data Model folders in Data Lake Storage Gen2. Other data consumers include Azure data-platform services (such as Azure Machine Learning, Azure Data Factory, and Azure Databricks) and turnkey software as a service (SaaS) applications (such as Dynamics 365 Sales Insights). A data consumer might have access to many Common Data Model folders to read content throughout the data lake. If a data consumer wants to write back data or insights that it has derived from a data producer, it should follow the same pattern as a data producer and write within its own file system.

The objectives outlined in the points below must be fulfilled to maintain information architecture standards.

Collect data to address the needs of the users and other stakeholders

Collecting data is an important activity to define a scope that meets all stakeholder requirements. Stakeholder requirements refer to what is expected out of an activity such as business capabilities being addressed, resource requirements, and timeline. Assessing these requirements will make it easier to draw boundaries around the activity and ensure that a high-quality result is being delivered. To track and demonstrate that requirements are being fulfilled throughout the activity, data must be collected first. Data collection is a systematic process of gathering observations for the purposes of addressing programs or policies in a business or the government.

The first step in collecting data is to identify what information needs to be gathered and where it will come from. For example, when creating a centralized patient database for a hospital, once the user requirements are identified, the way the data will be collected should be considered. There are several ways of collecting data, such as interviews, surveys or questionnaires, workshops, and observation of consumers. The goal is to gather these requirements and prioritize them. Questions for surveys or interviews must be consistent to keep the data organized and easier to prioritize. The data itself should be reusable and easily transportable to another system to save time and money.

- assess program objectives based on data requirements, as well as users, business and stakeholder needs

How to achieve:

* Summarize how the architecture meets the data needs of the users and other key stakeholders including:

* How the data asset contributes to the outcomes and needs of the users and other stakeholders

* Gaps in the existing data assets to meet the needs of the users and other stakeholders and how the architecture addresses these gaps

* Gaps in data collection and analysis, and how the architecture addresses them so that the department can ensure it is serving all members of society

* Alignment to the data foundation of the departmental information/data architecture practice

* Alignment to the theoretical foundation of the departmental information/data architecture practice

Tools:

* For Data Foundation – Implement:

* Data Catalogue

* Benefits Knowledge hub

* Data Lake (growth)

* Data Science and Machine Platform

* Stakeholder Requirements

* Solution Requirements

- collect only the minimum set of data needed to support a policy, program, or service

How to achieve:

* Summarize how the architecture aligns to “collect with a purpose” including:

* What is necessary (as opposed to what is sufficient) to meet the stakeholder need

* Supporting Performance Information Profiles (PIPs) used to assess progress towards targets and broader objectives

Tools:

* Value Stream (Value Item and Value Proposition – Context on what we measure)

* KPI (Linked to benefits, outcomes and objectives)
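One way to make "collect with a purpose" enforceable is to declare, for each purpose, the minimum set of fields it needs and to drop everything else at intake. The sketch below assumes a hypothetical `REQUIRED_FIELDS` register; the purposes and field names are illustrative only, and in practice the register would be derived from the value stream and KPIs listed above.

```python
# Hypothetical register: for each declared purpose, the minimum fields needed.
REQUIRED_FIELDS: dict[str, set[str]] = {
    "benefit_eligibility": {"client_id", "date_of_birth", "province"},
    "service_survey": {"client_id", "satisfaction_score"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Drop every field not declared as necessary for the stated purpose."""
    if purpose not in REQUIRED_FIELDS:
        raise ValueError(f"No declared purpose named {purpose!r}; do not collect.")
    allowed = REQUIRED_FIELDS[purpose]
    return {name: value for name, value in record.items() if name in allowed}


submitted = {
    "client_id": 7,
    "date_of_birth": "1980-01-01",
    "province": "NS",
    "occupation": "teacher",  # not needed for eligibility
    "phone": "555-0100",      # not needed for eligibility
}
print(minimize(submitted, "benefit_eligibility"))
```

Rejecting undeclared purposes outright, rather than passing data through, keeps the burden on justifying what is necessary instead of what is merely sufficient.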

- reuse existing data assets where permissible and only acquire new data if required

How to achieve:

* Summarize reusability of the architecture’s data assets given:

* Context of data assets and user and stakeholder needs

* Data quality and fitness for purpose

* Privacy and Security Regulatory Framework

Tools:

* Legislative / Regulations

- ensure data collected, including from third-party sources, are of high quality

How to achieve:

* Summarize how the architecture meets the data quality requirements of third-party sources:

* Data quality is fit for purpose

* Data quality dimensions including:

* Relevance

* Timeliness

* Consistency

* Reliability

* Interpretability

* Usability

* Data quality mechanism

Tools:

* Data Foundation – Implement (Leverage the standard definition)

* Data Catalogue

* Benefits Knowledge hub

* Data Lake (growth)

* Data Science and Machine Platform
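Some of the quality dimensions above can be checked automatically when data arrives from a third party, while others, such as relevance and interpretability, are harder to automate. The sketch below measures only timeliness and consistency. The 30-day freshness threshold, the `province` and `updated` fields, and the use of province codes as the consistency rule are hypothetical assumptions; a real data quality mechanism would take its thresholds from the asset's documented fitness-for-purpose requirements.

```python
from datetime import date

# Hypothetical thresholds for illustration only.
MAX_AGE_DAYS = 30
VALID_PROVINCES = {"AB", "BC", "MB", "NB", "NL", "NS", "NT", "NU", "ON", "PE", "QC", "SK", "YT"}


def quality_report(records: list[dict], as_of: date) -> dict[str, float]:
    """Return the share of records (0.0 to 1.0) passing each automated check."""
    if not records:
        return {"timeliness": 0.0, "consistency": 0.0}
    n = len(records)
    # Timeliness: updated within the allowed number of days.
    timely = sum((as_of - r["updated"]).days <= MAX_AGE_DAYS for r in records)
    # Consistency: value drawn from the agreed code list.
    consistent = sum(r.get("province") in VALID_PROVINCES for r in records)
    return {"timeliness": timely / n, "consistency": consistent / n}


third_party = [
    {"province": "ON", "updated": date(2024, 5, 20)},
    {"province": "Ont.", "updated": date(2024, 5, 28)},
    {"province": "QC", "updated": date(2024, 1, 2)},
    {"province": "bc", "updated": date(2024, 5, 30)},
]
print(quality_report(third_party, as_of=date(2024, 6, 1)))
# {'timeliness': 0.75, 'consistency': 0.5}
```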

Manage and reuse data strategically and responsibly

Data architecture is the management of data by translating business requirements into technical requirements for an organization. Data management refers to the collection, storage, and use of data in an information system. Data management and the direction of data flow are guided by the models, policies, rules, and standards used by the organization. Together, these provide a foundation for working efficiently with data and for governing data access by establishing roles, responsibilities, and accountabilities. For example, an organization may have conceptualized a system to store information. For that system to succeed, it must provide adequate data storage capabilities as well as role-based access functionality. To assess these capabilities, the organization should review the system's functions against the user and stakeholder requirements to ensure adequate support for organizational policies. The system must also maintain data lineage so that data can be traced back to its origin.

Data architectures define and set data standards and principles. Translating business requirements into technical requirements may involve creating blueprints for data flow and data management, as well as assessing potential data sources. Plans may be devised to make these sources accessible to all employees while keeping them protected under existing security and privacy policies. Data architecture identifies the data consumers within an organization, aligns with their varying requirements, and gives them access to usable data whenever they need it through a synchronized process. For example, within a hospital, nurses and doctors use and work with patient data. Depending on their role, some staff must update data such as a patient's illness or prescribed medication, while others only need to view the data and act on it to coordinate rooms and available medical equipment. A centralized system, or a set of interoperable systems, with differentiated features and permissions is needed so that all of this information can be accessed at any time. If multiple non-interoperable systems were used for different data sets, it would be difficult to maintain the flow of data throughout the hospital, which could lead to missed opportunities for time-sensitive action and harm patients in critical condition.
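The differentiated permissions in the hospital example can be sketched as a deny-by-default, role-based permission table. The roles, fields, and grants below are hypothetical and chosen only to mirror the example; a production system would normally rely on the access control features of its identity and data platforms rather than a hand-written dictionary.

```python
from enum import Enum, auto


class Action(Enum):
    READ = auto()
    UPDATE = auto()


# Hypothetical grants: doctors update clinical data, nurses read it, and
# coordinators manage rooms and equipment without seeing clinical data.
PERMISSIONS: dict[str, dict[str, set[Action]]] = {
    "doctor": {
        "diagnosis": {Action.READ, Action.UPDATE},
        "prescription": {Action.READ, Action.UPDATE},
        "room": {Action.READ},
    },
    "nurse": {
        "diagnosis": {Action.READ},
        "prescription": {Action.READ},
        "room": {Action.READ},
    },
    "coordinator": {
        "room": {Action.READ, Action.UPDATE},
        "equipment": {Action.READ, Action.UPDATE},
    },
}


def is_allowed(role: str, field: str, action: Action) -> bool:
    """Deny by default: allow only actions explicitly granted to the role."""
    return action in PERMISSIONS.get(role, {}).get(field, set())


print(is_allowed("doctor", "prescription", Action.UPDATE))  # True
print(is_allowed("coordinator", "diagnosis", Action.READ))  # False
```

Denying by default means an unknown role or an unlisted field yields no access, which is the safer failure mode for patient data.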

- define and establish clear roles, responsibilities, and accountabilities for data management

How to achieve:

* Summarize how the architecture assists in defining key data management roles and their responsibilities to ensure data is correct, consistent, and complete including:

* Identifies the data steward responsibilities;

* Identifies the data consumer responsibilities; and

* Identifies the data custodian responsibilities.

Tools:

* Stakeholders

* Business Process Model

* Functional Requirements

* Business Glossary

- identify and document the lineage of data assets

How to achieve:

* Summarize how the architecture’s data assets demonstrate alignment with department's data governance and strategy including:

* Alignment to the data foundation of the ESDC information/data architecture practice

* Alignment to the theoretical foundation of the ESDC information/data architecture practice

Tools:

* Target state (solution data elements)

* Data Foundation – Implement (Leverage the standard definition)

* Data Catalogue

* Benefits Knowledge hub

* Data Lake (growth)

* Data Science and Machine Platform

* Theoretical Foundation

* EDRM (Conceptual and Logical)

* Business Glossary

* Departmental Data Strategy
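Documenting lineage amounts to recording, for each data asset, which upstream assets it was derived from and how, so that any asset can be traced back to its original sources. The sketch below assumes a simple in-memory catalogue with hypothetical asset names; in practice this metadata would live in a tool such as the Data Catalogue listed above.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class DataAsset:
    name: str
    derived_from: tuple[str, ...] = ()       # names of upstream data assets
    transformation: str = "original source"  # how this asset was produced


def trace_lineage(name: str, catalogue: dict[str, DataAsset]) -> list[str]:
    """Return every upstream asset of `name`, nearest first (breadth-first)."""
    ancestors: list[str] = []
    queue = deque(catalogue[name].derived_from)
    while queue:
        current = queue.popleft()
        if current in ancestors:
            continue
        ancestors.append(current)
        queue.extend(catalogue[current].derived_from)
    return ancestors


def origins(name: str, catalogue: dict[str, DataAsset]) -> list[str]:
    """Return the original sources (assets with no upstream) behind `name`."""
    return [a for a in trace_lineage(name, catalogue) if not catalogue[a].derived_from]


catalogue = {
    asset.name: asset
    for asset in [
        DataAsset("raw_applications"),
        DataAsset("raw_payments"),
        DataAsset("clean_applications", ("raw_applications",), "deduplicated; addresses standardized"),
        DataAsset("client_dashboard", ("clean_applications", "raw_payments"), "joined and aggregated by region"),
    ]
}
print(trace_lineage("client_dashboard", catalogue))
# ['clean_applications', 'raw_payments', 'raw_applications']
print(origins("client_dashboard", catalogue))
# ['raw_payments', 'raw_applications']
```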

- define retention and disposition schedules in accordance with business value as well as applicable privacy and security policy and legislation

How to achieve:

* For each key data asset, summarize:

* Retention and disposition schedules

* Disposition process

Tools:

* Target state (solution data elements)

* Non-Functional Requirements

* IM Best Practices and Standards
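A retention and disposition schedule can be expressed as rules that say which event starts the retention period, how long the asset is kept, and what happens at the end. The asset types, trigger events, and periods below are hypothetical placeholders; real values come from the institution's approved retention and disposition schedules and applicable legislation.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class RetentionRule:
    trigger: str      # event that starts the retention period
    years: int        # how long the asset is kept after the trigger
    disposition: str  # what happens at the end, e.g. "destroy"


# Hypothetical schedule for illustration only.
SCHEDULE = {
    "application_file": RetentionRule("file closed", 6, "destroy"),
    "policy_record": RetentionRule("policy superseded", 10, "transfer to archives"),
}


def add_years(start: date, years: int) -> date:
    """Add whole years, moving 29 February to 28 February when needed."""
    try:
        return start.replace(year=start.year + years)
    except ValueError:
        return start.replace(year=start.year + years, day=28)


def disposition_due(asset_type: str, trigger_date: date) -> tuple[date, str]:
    rule = SCHEDULE[asset_type]
    return add_years(trigger_date, rule.years), rule.disposition


def due_for_disposition(assets: list[tuple[str, str, date]], today: date) -> list[str]:
    """IDs of assets whose retention period has ended on or before `today`."""
    return [
        asset_id
        for asset_id, asset_type, trigger_date in assets
        if disposition_due(asset_type, trigger_date)[0] <= today
    ]


inventory = [
    ("A-1", "application_file", date(2017, 1, 15)),
    ("A-2", "application_file", date(2022, 6, 1)),
    ("P-1", "policy_record", date(2010, 9, 1)),
]
print(due_for_disposition(inventory, today=date(2024, 6, 1)))  # ['A-1', 'P-1']
```

The list only flags assets for the disposition process; the disposition itself (destruction or transfer) would still follow the documented process.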

- ensure data are managed to enable interoperability, reuse and sharing to the greatest extent possible within and across departments in government to avoid duplication and maximize utility, while respecting security and privacy requirements

How to achieve:

* Summarize how the architecture enables interoperability, reuse and sharing to the greatest extent possible within and across departments

* Summarize how the architecture avoids data duplication

Tools:

* Target State

* Data Foundation – Implement (Leverage the standard definition)

* Data Catalogue

* Benefits Knowledge hub

* Data Lake (growth)

- contribute to and align with enterprise and international data taxonomy and classification structures to manage, store, search and retrieve data

How to achieve:

* Summarize the alignment to departmental/GC:

* Data taxonomy structure

* Data classification structure

Tools:

* Data Foundation – Implement (Leverage the standard definition)

* Data Catalogue

* Theoretical Foundation

* EDRM (Conceptual and Logical)

* Business Glossary

Use and share data openly in an ethical and secure manner

Organizations should adhere to ethical guidelines on data sharing to address and meet emerging standards and legislative requirements. It is an organization's responsibility to be transparent about, and respectful of, how data is used within the organization. Using and sharing data ethically can build trust between the public and the organization. Failing to prioritize the privacy, security, consent, and ownership of data can harm an organization's reputation and credibility, and in extreme cases threaten its continued existence. To share data ethically and legally, an organization must request participants' consent. How personal data will be used and shared must be communicated transparently so that no one is misled.

To keep data private and more generic for future sharing, it can be anonymized by removing participants' identifying ("tombstone") information, such as name, address, and occupation. If anonymization is being considered, it is best planned during the collection phase. Third-party readers must be informed when data has been anonymized, for example by marking in the text where content has been removed. Additionally, an original copy of the data should always be kept separately and securely, as a record of all data that has been anonymized in the final product. Third-party readers should have valid reasons and the right qualifications to access the original data, so that it is treated carefully. Data must not be shared when there is a conflict with the need to protect personal identities, when the organization does not own the data, or when releasing the data presents a security risk.
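The anonymization practices described above (removing tombstone fields, marking where content was removed, and keeping the original separately) can be sketched as follows. The field list mirrors the examples in the text, but the `[REMOVED]` marker and record layout are hypothetical, and secure storage of the original copy is outside the scope of this sketch.

```python
import copy

# Tombstone fields named in the text; a real project would list its own.
TOMBSTONE_FIELDS = {"name", "address", "occupation"}
REMOVED_MARKER = "[REMOVED]"  # tells readers that content was taken out


def anonymize(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Return (shareable records, separate original copy).

    Tombstone fields are replaced by a visible marker rather than silently
    dropped, and a deep copy of the input is returned so it can be stored
    separately and securely as the record of what was removed.
    """
    original = copy.deepcopy(records)
    shareable = [
        {key: (REMOVED_MARKER if key in TOMBSTONE_FIELDS else value)
         for key, value in record.items()}
        for record in records
    ]
    return shareable, original


records = [{"name": "Jane Doe", "address": "1 Main St", "occupation": "nurse",
            "age_band": "40-49", "program": "EI"}]
shared, secured_original = anonymize(records)
print(shared[0])
```

Removing tombstone fields alone may not be enough: remaining attributes, such as an age band, can still single someone out once they are combined with other data.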

- share data openly by default as per the Directive on Open Government and Digital Standards, while respecting security and privacy requirements; data shared should adhere to existing enterprise and international standards, including on data quality and ethics

How to achieve:

* Summarize how the architecture supports sharing data openly by default as per Directive on Open Government and Digital Standards given:

* Existing departmental and GC data standards and policies

* International data standards

* The Privacy Act

* Fitness for purpose

* Ethics

Tools:

* Data Foundation – Implement (Leverage the standard definition)

* Data Catalogue

* Benefits Knowledge Hub

* Data Lake (growth)

* Data Science and Machine Platform

* Theoretical Foundation

* EDRM (Conceptual and Logical)

* Business Glossary

* Departmental Data Strategy

- ensure data formatting aligns to existing enterprise and international standards on interoperability; where none exist, develop data standards in the open with key subject matter experts

How to achieve:

* Summarize how the architecture utilises existing enterprise and international data standards

* Summarize how the architecture has developed any data standards through open collaboration with key subject matter experts and the Enterprise Data Community of Practice.

Tools:

* Data Standards

* NIEM

* OpenData

* National Address Register

* Reference Data Repository

- ensure that combined data does not risk identification or re‑identification of sensitive or personal information

How to achieve:

* Summarize how the architecture ensures that aggregating and combining data does not pose a risk to information sensitivity or personal information
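One common way to test whether combined data risks re-identification is k-anonymity: every combination of quasi-identifier values (attributes that are not names but could identify someone when combined) must be shared by at least k records. This is a swapped-in technique, not one the guide prescribes; the fields, k value, and records below are hypothetical. The example shows how combining a dataset that is safe on its own with an age band from a second source can make one person unique.

```python
from collections import Counter


def smallest_group_size(records: list[dict], quasi_identifiers: list[str]) -> int:
    """Size of the smallest group of records sharing the same quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values()) if groups else 0


def is_k_anonymous(records: list[dict], quasi_identifiers: list[str], k: int) -> bool:
    """True if every combination of quasi-identifier values appears at least k times."""
    return smallest_group_size(records, quasi_identifiers) >= k


# Source A carries a postal prefix; combining it with source B adds an age band.
combined = [
    {"postal_prefix": "K1A", "age_band": "30-39", "program": "EI"},
    {"postal_prefix": "K1A", "age_band": "30-39", "program": "CPP"},
    {"postal_prefix": "K1A", "age_band": "70-79", "program": "EI"},
]
print(is_k_anonymous(combined, ["postal_prefix"], k=2))              # True
print(is_k_anonymous(combined, ["postal_prefix", "age_band"], k=2))  # False
```

Passing a k-anonymity check does not rule out every risk; for example, if all records in a group share the same sensitive value, that value is still disclosed.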

Design with privacy in mind for the collection, use and management of personal information

Protecting data is important for keeping sensitive information, such as industry knowledge and personal information, private and on the organization's own servers. For federal government institutions, protecting personal information is a legal requirement under the Privacy Act. Furthermore, clients who invest their money and time expect, at a minimum, that their data will be held safely. Data privacy also protects an organization's reputation and credibility, since unprotected data is exposed to hackers and other unauthorized parties. For example, within a hospital, patients' personal information should be kept secure and away from the public. This is expected of hospitals, and failing to comply can result in legal and ethical issues. It would also be dangerous for strangers to gain access to patient information such as their address and health conditions.

- ensure alignment with guidance from the appropriate institutional ATIP Office with respect to interpretation and application of the Privacy Act and related policy instruments

How to achieve:

* Describe how the architecture aligns with ATIP Office guidance on the personal information regulatory framework, policy framework, and consent directives

- assess initiatives to determine if personal information will be collected, used, disclosed, retained, shared, and disposed of

How to achieve:

* Has the initiative assessed whether personal information will be collected, used, disclosed, retained, shared, and disposed of?

- only collect personal information if it directly relates to the operation of the programs or activities

How to achieve:

* Summarize how the architecture ensures the personal information collected is directly required for the operation of the programs or activities

- notify individuals of the purpose for collection at the point of collection by including a privacy notice

How to achieve:

* Does the solution's privacy notice state the purpose for collecting this personal information?

* Does the solution provide a privacy notice at the point of personal information collection?

- personal information should be, wherever possible, collected directly from individuals but can be from other sources where permitted by the Privacy Act

How to achieve:

* Does the architecture collect personal information directly from the individual?

* If not, what personal information is collected from other sources, and does its collection comply with the Privacy Act and the consent directive of the source?

Tools:

* Target State Architecture

* Interim State Architecture

- personal information must be available to facilitate Canadians’ right of access to and correction of government records

How to achieve:

* Summarize how the architecture facilitates Canadians' right to access their personal information records

* Summarize how the architecture facilitates Canadians' right to correct their personal information records

Tools:

* Target State Architecture

* Interim State Architecture

- design access controls into all processes and across all architectural layers from the earliest stages of design to limit the use and disclosure of personal information

How to achieve:

* Summarize how the architecture limits the use and disclosure of personal information in accordance with privacy legislation, policy frameworks, and consent directives

- design processes so personal information remains accurate, up‑to‑date and as complete as possible, and can be corrected if required

How to achieve:

* Summarize how the architecture ensures personal information remains accurate

* Summarize how the architecture ensures personal information remains up-to-date

* Summarize how the architecture ensures personal information remains as complete as possible

* Summarize how the architecture ensures personal information can be corrected if required

Tools:

* Non-Functional Requirements

* Functional Requirements

- de‑identification techniques should be considered prior to sharing personal information

How to achieve:

* Outline the de-identification techniques used by the architecture in sharing personal information
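Two common de-identification techniques are pseudonymization (replacing a direct identifier with a consistent substitute) and generalization (coarsening values such as a full birth date). The sketch below pseudonymizes with a keyed hash (HMAC-SHA-256 from Python's standard library) and generalizes the birth date to a year. The field names and key handling are hypothetical. Pseudonymized data may still be considered personal information, so this complements, rather than replaces, the assessment with the ATIP Office.

```python
import hashlib
import hmac
import secrets

# Hypothetical key. It must be stored apart from the shared data (for example
# in a key vault); without it, recipients cannot recompute the pseudonyms.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed, repeatable pseudonym (HMAC-SHA-256)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record: dict) -> dict:
    """Pseudonymize the identifier, generalize the birth date, and drop the name."""
    return {
        "pid": pseudonymize(record["client_number"]),
        "birth_year": record["date_of_birth"][:4],  # YYYY-MM-DD -> YYYY
        "program": record["program"],
    }


record = {"client_number": "123456789", "name": "Jane Doe",
          "date_of_birth": "1985-07-14", "program": "EI"}
print(deidentify(record))
```

A keyed hash is used instead of a plain hash because identifiers such as client numbers come from a small space, so unkeyed hashes could be reversed by hashing every possible value.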

- in collaboration with the appropriate institutional ATIP Office, determine if a Privacy Impact Assessment (PIA) is required to identify and mitigate privacy risks for new or substantially modified programs that impact the privacy of individuals

How to achieve:

* Describe how the architecture addresses the recommendations of the PIA

* If not all recommendations of the PIA are being addressed, outline how the business will address any residual risks of the PIA

- establish procedures to identify and address privacy breaches so they can be reported quickly to the appropriate institutional ATIP Office and responded to efficiently

How to achieve:

* Are procedures established to identify and address privacy breaches?

* Summarize how the architecture enables or supports these procedures

Tools:

* Business Process Model