
Towards a Responsible AI Framework for the Design of Automated Decision Systems in DFO: a Case Study of Bias Detection and Mitigation

Executive Summary

Automated decision systems are computer systems that automate, or assist in automating, part or all of an administrative decision-making process. Currently, automated decision systems driven by Machine Learning (ML) algorithms are being used by the Government of Canada to improve service delivery. The use of such systems can have benefits and risks for federal institutions, including the Department of Fisheries and Oceans Canada (DFO). There is a need to ensure that automated decision systems are deployed in a manner that reduces risks to Canadians and federal institutions and leads to more efficient, accurate, consistent, and interpretable decisions.

In order to support these goals, the Office of the Chief Data Steward (OCDS) is developing a data ethics framework which will provide guidance on the ethical handling of data and the responsible use of Artificial Intelligence (AI). The guidance material on the responsible use of AI addresses 6 major themes that have been identified as being pertinent to DFO projects using AI. These themes are Privacy and Security, Transparency, Accountability, Methodology and Data Quality, Fairness, and Explainability. While many of these themes have a strong overlap with the domain of data ethics, the theme of Fairness covers many ethical concerns unique to AI due to the nature of the impacts that bias can have on AI models.

Supported by the 2021 – 2022 Results Fund, the OCDS and IMTS are prototyping automated decision systems based on the outcome of the AI pilot project. This effort includes defining an internal process to detect and mitigate bias, a potential risk of ML-based automated decision systems. A case study was designed to apply this process to assess and mitigate bias in a predictive model that detects vessels’ fishing behavior. The process defined in this work and the results of the field study will contribute to the guidance material that will eventually form the responsible AI component of the data ethics framework.

Introduction

Unlike traditional automated decision systems, ML-based automated decision systems do not follow explicit rules authored by humans [1]. ML models are not inherently objective. Data scientists train models on a data set of training examples, and human involvement in providing and curating this data can make a model's predictions susceptible to bias. As a result, ML-based automated decision systems have far-reaching implications for society, ranging from new questions about legal responsibility for mistakes these systems make to the retraining of workers displaced by these technologies. A framework is needed to ensure that decisions are accountable and transparent and that they support ethical practices.

Responsible AI

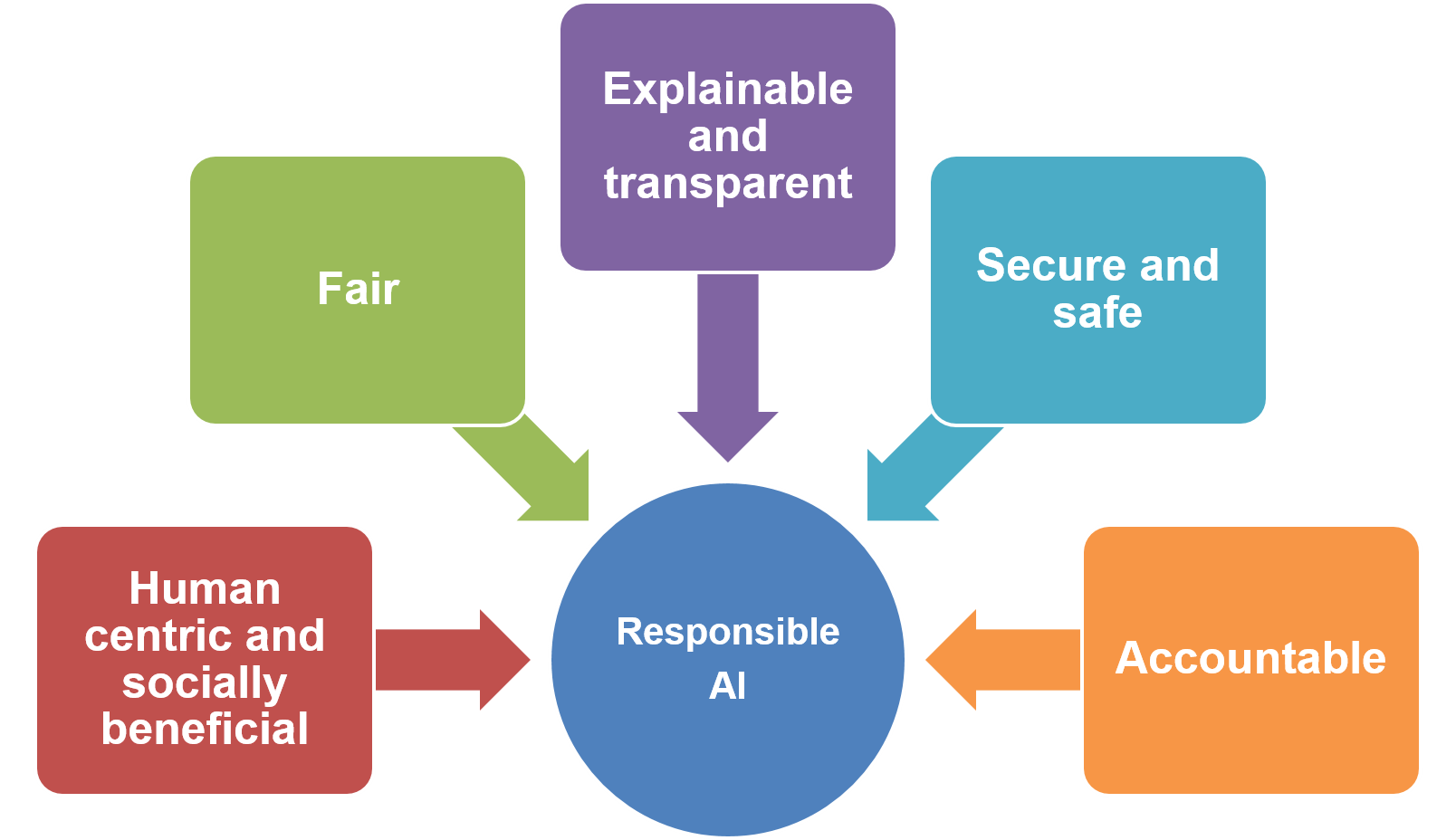

Responsible AI is a governance framework that documents how a specific organization is addressing the challenges around AI from both an ethical and a legal point of view.

To support Responsible AI practices, organizations have identified principles to guide the development of AI applications and solutions. According to the study “The global landscape of AI ethics guidelines” [2], some principles are mentioned more often than others. Building on this, Gartner has concluded that a global convergence is emerging around five ethical principles [3]:

- Human-centric and socially beneficial

- Fair

- Explainable and transparent

- Secure and safe

- Accountable

The definition of the various guiding principles is included in [2].

The Treasury Board Directive on Automated Decision-Making

The Treasury Board Directive on Automated Decision-Making is defined as a policy instrument to promote ethical and responsible use of AI. It outlines the responsibilities of federal institutions using AI-based automated decision systems.

The Algorithmic Impact Assessment Process

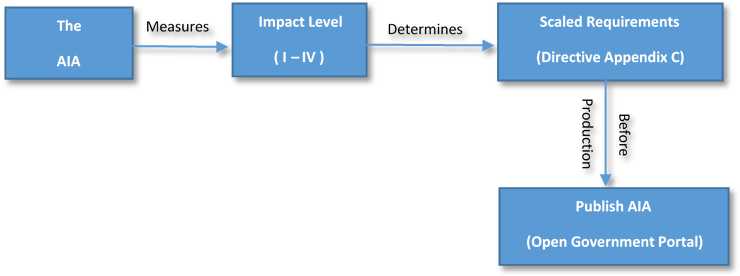

According to the Directive, an Algorithmic Impact Assessment (AIA) must be conducted before the production of any automated decision system to assess the risks of the system. The assessment must be reviewed and updated on a scheduled basis, including whenever the functionality or scope of the automated decision system changes. The AIA tool is a questionnaire that determines the impact level of an automated decision system. It is composed of 48 risk and 33 mitigation questions. Assessment scores are based on many factors, including system design, algorithm, decision type, impact, and data. The tool assigns an impact level between I (presenting the least risk) and IV (presenting the greatest risk). Depending on the impact level (which will be published), the Directive may impose additional requirements. The process is described in Figure 2.

Bias Detection and Mitigation

To comply with the Treasury Board Directive on Automated Decision-Making, DFO is establishing an internal process, as part of the Results Fund project for 2021 – 2022, to detect and mitigate potential bias in ML-based automated decision systems.

Bias Detection and Mitigation Process

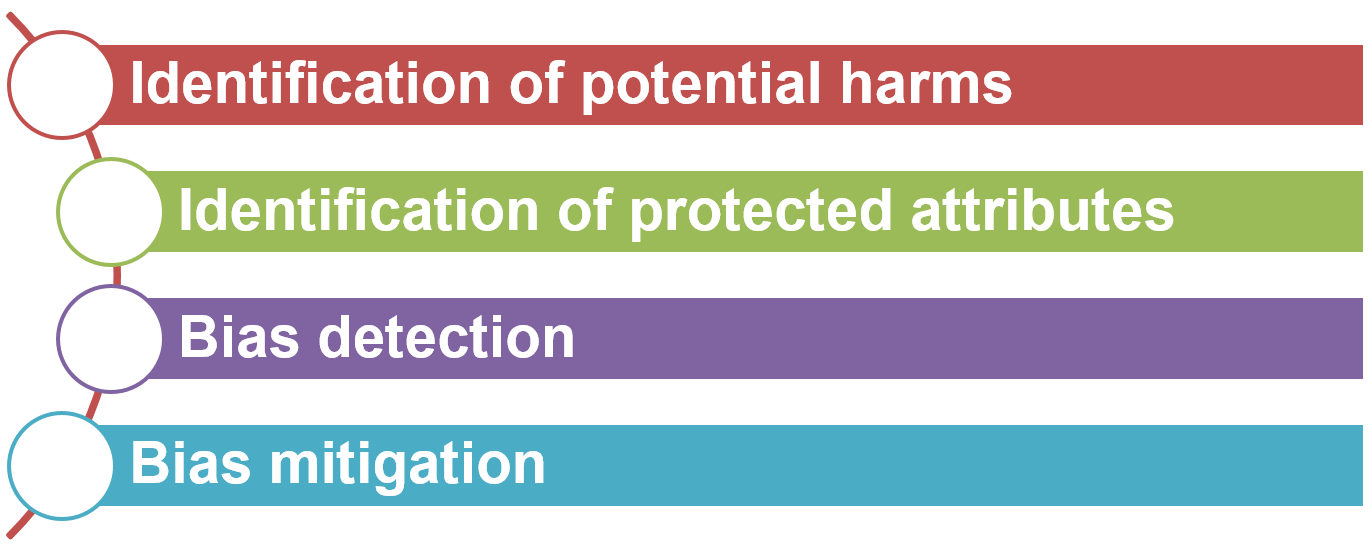

The process of bias detection and mitigation is dependent upon the identification of the context within which bias is to be assessed. Given the breadth of sources from which bias can originate, exhaustive identification of the sources relevant to a particular system and the quantification of their impacts can be impractical. As such, it is recommended to instead view bias through the lens of harms that can be induced by the system [4]. Common types of harm can be represented through formal mathematical definitions of fairness in decision systems. These definitions provide the foundation for the quantitative assessment of fairness as an indicator of bias in systems.
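As an illustration, two widely used formal definitions are sketched below for a binary classifier with predicted label Ŷ, true label Y and protected attribute A (this notation is ours, not the source's). The first constrains rates of representation; the second constrains rates of error on instances whose true label is negative:

```latex
% Demographic parity: equal rates of positive predictions across groups a, b
P(\hat{Y} = 1 \mid A = a) = P(\hat{Y} = 1 \mid A = b) \quad \forall\, a, b

% False positive rate parity: equal error rates on true negatives across groups
P(\hat{Y} = 1 \mid Y = 0, A = a) = P(\hat{Y} = 1 \mid Y = 0, A = b) \quad \forall\, a, b
```

Additionally requiring equal true positive rates, P(Ŷ = 1 | Y = 1, A = a), across groups gives the criterion known as equalized odds.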

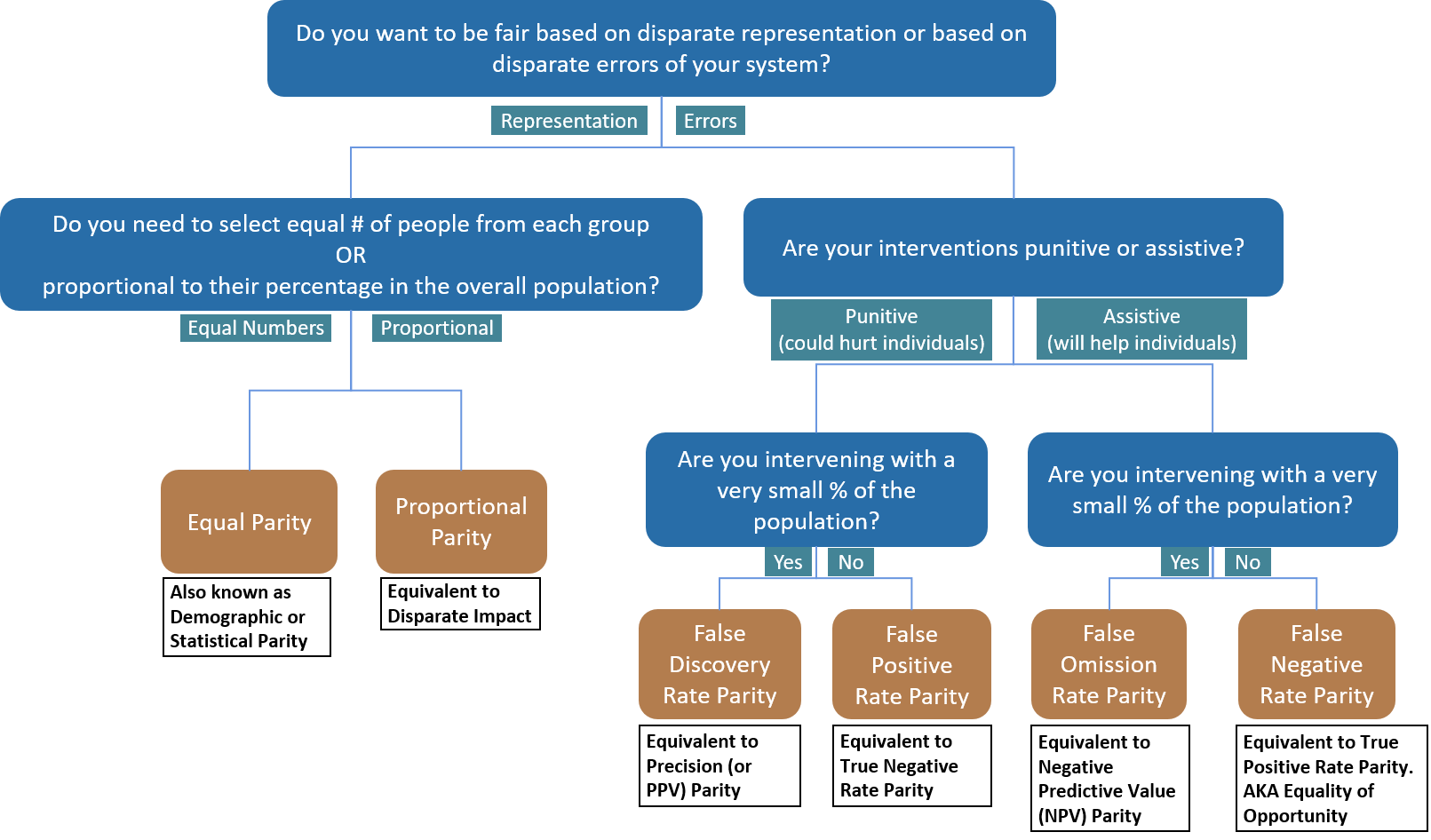

Harms are typically considered in the context of error rates and/or rates of representation. Differences in error rates across different groups of people induce disproportionate performance of the system, leading to unjust consequences or reduced benefits for certain groups. Differences in rates of representation, even in the absence of differences in rates of error, can lead to unfair distributions of benefits or penalties across groups of people. The types of harm applicable to a particular system are highly dependent upon the intended usage of the system. A flow chart to assist in identification of the metric of fairness most relevant to a system is provided in Figure 3.

Having laid out the potentials for harm in the system, the next step is to identify protected attributes. In the context of bias detection and mitigation, a protected attribute refers to a categorical attribute for which there are concerns of bias across the attribute categories. Common examples of protected attributes are race and gender. Relevant protected attributes for the application of the system must be identified in order to investigate disproportionate impacts across the attribute categories.

Once potential harms and affected groups have been assessed, bias detection and mitigation tools can be applied to measure and attempt to remedy unfairness in the system. The overall process can thus be broken into four steps:

- Identification of potential harms

- Identification of protected attributes

- Bias detection

- Bias mitigation

Bias Detection and Mitigation Tools

There is a growing number of technical bias detection and mitigation tools that can supplement AI practitioners’ capacity to avoid and mitigate AI bias. As part of the 2021 – 2022 Results Fund project, DFO has explored the following tools, which AI practitioners can use to detect and mitigate bias.

Microsoft’s Fairlearn

An open-source toolkit from Microsoft that allows AI practitioners to assess and improve the fairness of their AI systems. To help navigate trade-offs between fairness and model performance, the toolkit includes two components: an interactive visualization dashboard and bias mitigation algorithms [4].

IBM AI Fairness 360

An open-source toolkit from IBM that helps AI practitioners check for bias at multiple points along their ML pipeline, using the bias metric appropriate to their circumstances. It comes with more than 70 fairness metrics and 11 unique bias mitigation algorithms [6].

Case Study: Predictive Model for Detecting Vessels’ Fishing Behavior

This section details an investigation of one of the AI pilot project’s proofs of concept using the proposed Bias Detection and Mitigation Process. The investigation also yields insight into the practical considerations of bias detection and mitigation.

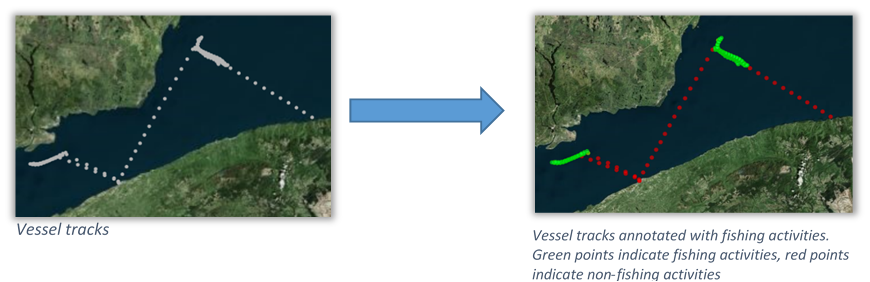

Through AI work funded by the 2020 – 2021 Results Fund, a proof of concept was developed for the automated detection of vessel fishing activity using an ML model. The resulting predictive model takes vessel movement tracks as input and outputs the tracks annotated with the vessel’s activity (i.e., fishing or not fishing), as illustrated in Figure 4. The model uses features such as the standard deviation of vessel speed, the standard deviation of vessel course, and gear type to detect vessel activity. Its predictions are then combined with other data sources, such as fisheries management areas and license conditions, to detect non-compliance with fisheries regulations.
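The source does not say how the speed and course features are computed. The sketch below shows one plausible way to compute them for a single window of a track, assuming speeds in knots and courses in degrees. Speed uses the ordinary sample standard deviation; course uses the circular standard deviation, because course is an angle and a naive standard deviation treats 350° and 10° as far apart. The windowing, the circular choice and the function names are all assumptions for illustration.

```python
import math
import statistics


def circular_std_deg(angles_deg):
    """Circular standard deviation, in degrees, of a list of headings.

    Computed as sqrt(-2 ln R), where R is the mean resultant length of the
    unit vectors for each heading; this handles wrap-around at 0/360 degrees.
    """
    rads = [math.radians(a) for a in angles_deg]
    mean_cos = sum(math.cos(r) for r in rads) / len(rads)
    mean_sin = sum(math.sin(r) for r in rads) / len(rads)
    r_bar = min(math.hypot(mean_cos, mean_sin), 1.0)  # clamp float round-off above 1
    if r_bar == 0.0:
        return float("inf")  # headings cancel out exactly: no preferred direction
    return math.degrees(math.sqrt(-2.0 * math.log(r_bar)))


def track_window_features(speeds_knots, courses_deg, gear_type):
    """Hypothetical feature dict for one window of a vessel track (needs >= 2 points)."""
    return {
        "speed_std": statistics.stdev(speeds_knots),  # sample standard deviation
        "course_std": circular_std_deg(courses_deg),
        "gear_type": gear_type,  # categorical; encoding is left to the model pipeline
    }
```

For the headings 350° and 10°, `circular_std_deg` returns about 10°, whereas `statistics.stdev([350, 10])` returns about 240, which would wrongly suggest erratic steering.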

The fishing detection predictive model will serve as the backbone of an automated decision system for detecting non-compliant fishing behavior. After consultation with the Treasury Board of Canada, DFO has established that the Treasury Board Directive on Automated Decision-Making applies to the production of the model.

To comply with the Directive, and to ensure that the resulting automated decision system is both effective and fair, an investigation has been conducted into the potential for bias in the fishing detection model, and bias mitigation techniques have been applied.

Terms and Definitions

Bias: In the context of automated decision systems, the presence of systematic errors or misrepresentation in data and system outcomes. Bias may originate from a variety of sources including societal bias (e.g., discrimination) embedded in data, unrepresentative data sampling and preferential treatment imparted through algorithms.

Disparity: A systematic lack of fairness across different groups. Formally measured as the maximal difference between the values of a metric applied across the groups defined by a protected attribute.

False negative: An error in binary classification in which a positive instance is incorrectly classified as negative.

False positive: An error in binary classification in which a negative instance is incorrectly classified as positive.

False positive (negative) rate: The percentage of negative (positive) instances incorrectly classified as positive (negative) in a sample of instances to which binary classification is applied.

Protected attribute: An attribute that partitions a population into groups across which fairness is expected. Examples of common protected attributes are gender and race.
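The error-rate and disparity definitions above can be written as formulas. In this sketch, FP_g, TN_g, FN_g and TP_g denote the counts of false positives, true negatives, false negatives and true positives for group g of a protected attribute, G is the set of groups, and M_g is any per-group metric such as FPR_g (notation assumed for illustration):

```latex
\mathrm{FPR}_g = 100 \times \frac{FP_g}{FP_g + TN_g}
\qquad
\mathrm{FNR}_g = 100 \times \frac{FN_g}{FN_g + TP_g}

\mathrm{Disparity}(M) = \max_{g \in G} M_g \; - \; \min_{g \in G} M_g
```

Because the largest difference between any pair of groups is always the maximum minus the minimum, only the extreme groups need to be compared once the per-group values are known.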

Results of Bias Assessment

The bias assessment process has been conducted following the four steps proposed in the Bias Detection and Mitigation Process. Details on the results of the investigation for each of the steps are provided below.

Identification of Potential Harms

The investigation began with an assessment of the potential harms that bias in the system could induce. For the use case of non-compliance detection, an objective ground truth always exists: with complete knowledge of the actions taken by a fishing vessel and the conditions specified in its fishing license, it can be objectively determined whether the vessel complies with those conditions. If a system could make this determination correctly with perfect consistency, there would be no potential for harm through biased decisions. In practice, however, information is incomplete (e.g., about the fishing activity of the vessels), so perfect accuracy is not realistically achievable. The potential for harm therefore lies in the instances where the system makes errors.

In the binary (i.e., yes or no) detection of fishing activity, the system is susceptible to two types of errors:

- False positives – Reporting fishing activity when it has not occurred

- False negatives – Reporting an absence of fishing activity when in fact it has occurred

When the fishing detections are checked against license conditions, these errors take on two corresponding forms:

- False positives may lead to incorrect reporting of non-compliance

- False negatives may lead to missed detections of non-compliance

As the system is designed to assist in identifying cases that require punitive action, false positives can harm the operators of vessels incorrectly flagged for non-compliance. Note that the system is not intended to make final determinations of non-compliant behavior, but rather to help fishery officers identify cases that warrant further investigation. False positives are therefore unlikely to result in undue punitive action. However, they do result in unwarranted scrutiny of vessel activities, which is both undesirable and unfair to vessel operators. While perfect detection of fishing activity may be unattainable, it is nevertheless critical to make every effort to reduce error rates and to mitigate disproportionate (i.e., biased) impacts across different groups of people.

Although this investigation identified false positives as the primary source of potential harm, false negatives also carry some degree of less direct harm. Missed detections of non-compliance may allow illegal fishing to go uncaught, at a cost to both DFO and the commercial fisheries. If certain fisheries or geographic areas are more susceptible to false negatives than others, these costs may be distributed disproportionately.

Identification of Protected Attributes

In the context of the proof-of-concept system, disproportionate impact refers to a difference in the expected rate of errors across different groups of vessels. In other words, if certain types of vessels are more susceptible to false positives than others, this indicates bias in the system. Identifying meaningful groups into which to partition the population is itself a non-trivial task. In this investigation, vessels were partitioned into groups based on the fishing gear type they use, for two reasons. First, a vessel’s behavior is affected by the type of gear it is using, so differences in system performance across gear types are to be expected; these differences must be carefully examined to determine whether they contribute to a lack of fairness in the system. Second, quantitative analysis of model behavior requires data labelled with both the ground truth (fishing activity) and the protected attribute (gear type), so the choice was partly driven by the data available. Investigating groups based on Indigenous status is also an important goal and is planned for the field study stages, where richer sources of data will be available.

Bias Detection

To assess model fairness across gear types, the data is first partitioned by gear type, and the False Positive Rate (FPR) disparity [6] is then measured. The FPR is the percentage of negative instances (no fishing activity) that the model misclassifies as positive instances (fishing activity). The FPR disparity is the largest difference between the FPRs of any two gear-type groups. The larger this difference, the greater the degree of bias and unfairness in the system.

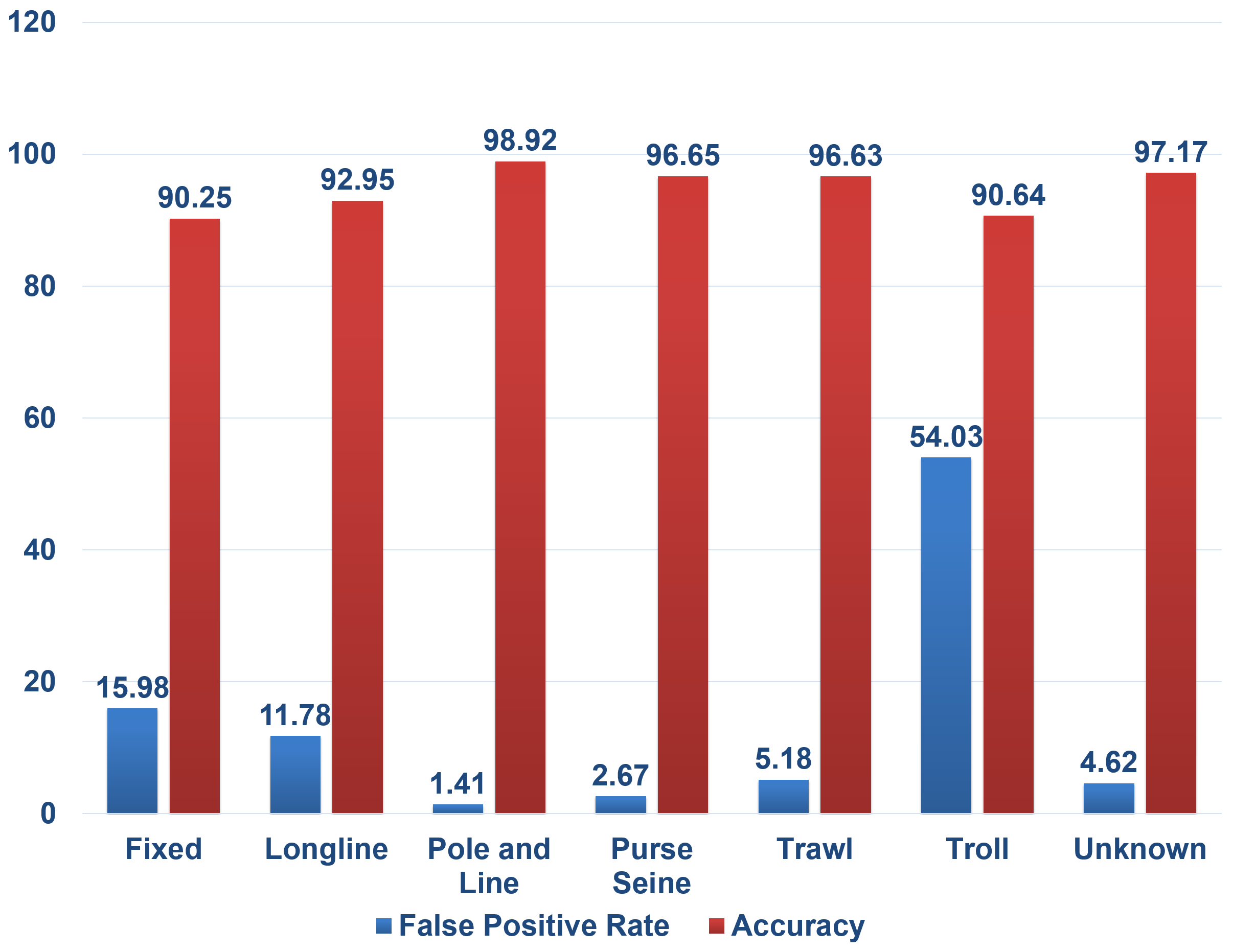

In this investigation, Fairlearn [4] was used to implement the FPR disparity measurements. As a point of reference, an interactive demo of IBM AI Fairness 360 [6] treats a model as unfair when similar disparity metrics exceed a threshold of 10 [7]. Results from the initial fishing detection model are shown in Figure 6. An excessively high FPR for the troll gear type produces an FPR disparity of 52.62, far beyond this reference point. These results reveal an unacceptable level of bias in the model that must be mitigated.
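A minimal, dependency-free sketch of the FPR disparity measurement described in this subsection, assuming labels are encoded as 1 (fishing) and 0 (not fishing) and each instance carries its gear type. The function names and toy data are hypothetical, and the threshold of 10 mirrors the AI Fairness 360 reference point, applied here to FPRs expressed in percent. In Fairlearn, comparable numbers can be obtained from `MetricFrame` with the `false_positive_rate` metric and `difference(method="between_groups")`, which report rates as fractions rather than percentages.

```python
from collections import defaultdict

# Assumed reference point, on the same percent scale as the FPRs below.
UNFAIRNESS_THRESHOLD = 10.0


def fpr_by_group(y_true, y_pred, groups):
    """Return {group: false positive rate in percent}.

    Labels are assumed to be 1 = fishing, 0 = not fishing. Groups with no
    negative (not-fishing) instances are skipped, since their FPR is undefined.
    """
    negatives = defaultdict(int)
    false_positives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:
            negatives[group] += 1
            if pred == 1:
                false_positives[group] += 1
    return {g: 100.0 * false_positives[g] / n for g, n in negatives.items()}


def fpr_disparity(rates):
    """Largest FPR difference between any two groups (max minus min)."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Toy data: "troll" appears in the source; "trawl" is a hypothetical second group.
    y_true = [0, 0, 0, 0, 1, 0, 0, 0, 0, 1]
    y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 1, 1]
    groups = ["troll"] * 5 + ["trawl"] * 5
    rates = fpr_by_group(y_true, y_pred, groups)
    gap = fpr_disparity(rates)
    verdict = "unfair" if gap > UNFAIRNESS_THRESHOLD else "within threshold"
    print(rates, gap, verdict)
```

On the toy data, troll has an FPR of 50.0 and trawl 25.0, so the disparity of 25.0 exceeds the assumed threshold and the model would be flagged.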

Bias Mitigation

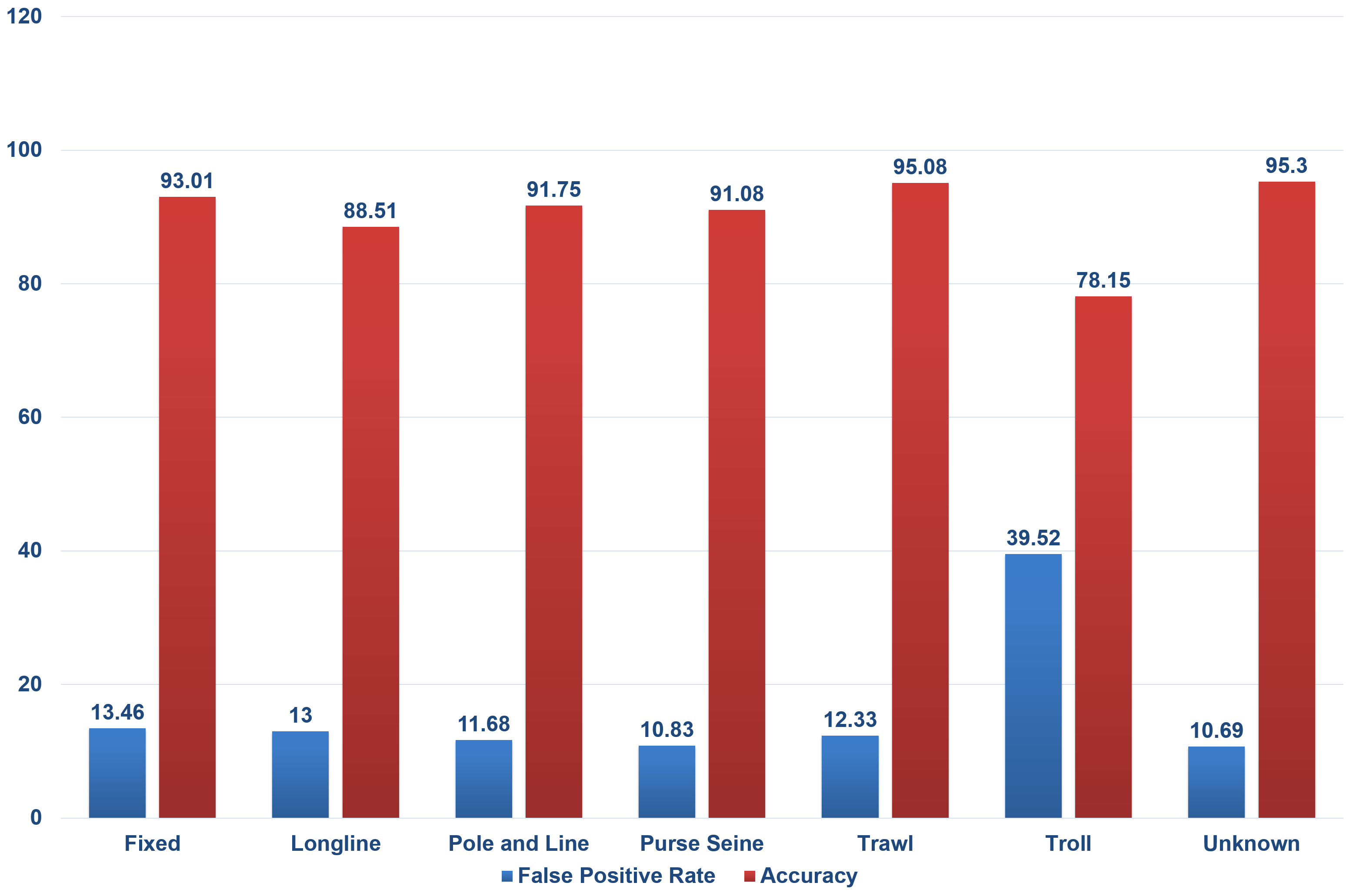

Bias mitigation algorithms implemented in Fairlearn and similar tools can be applied at various stages of the ML pipeline. In general, there is a trade-off between model performance and bias: mitigation algorithms typically reduce bias at some cost to model performance. Initial experiments confirmed this, with reductions in bias coming at a notable loss in performance. For example, in the results shown in Figure 7, a mitigation algorithm reduced the FPR disparity from 52.62 to 28.83 at the cost of lower fishing detection accuracy.
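The source does not name the mitigation algorithm behind Figure 7. To illustrate the trade-off, the sketch below uses a simple post-processing technique: choosing a separate decision threshold per gear type so that each group’s FPR stays at or below a target. This is similar in spirit to, but not the same as, Fairlearn’s `ThresholdOptimizer`; the function names, scores and target are hypothetical. Raising a group’s threshold lowers its FPR but can also lower its true positive rate, which is exactly the loss in detection accuracy described above.

```python
def rate_at(scores, labels, threshold, label_value):
    """Share of instances with the given true label whose score is >= threshold."""
    selected = [s for s, y in zip(scores, labels) if y == label_value]
    if not selected:
        return 0.0
    return sum(s >= threshold for s in selected) / len(selected)


def fpr_at(scores, labels, threshold):
    """False positive rate (fraction) when flagging scores >= threshold."""
    return rate_at(scores, labels, threshold, label_value=0)


def tpr_at(scores, labels, threshold):
    """True positive rate (fraction) when flagging scores >= threshold."""
    return rate_at(scores, labels, threshold, label_value=1)


def pick_group_thresholds(scores, labels, groups, target_fpr):
    """Per group, pick the lowest threshold whose FPR is <= target_fpr.

    FPR and TPR never increase as the threshold rises, so the lowest admissible
    threshold keeps as much of the group's TPR as the FPR target allows.
    """
    thresholds = {}
    for g in sorted(set(groups)):
        s_g = [s for s, gg in zip(scores, groups) if gg == g]
        y_g = [y for y, gg in zip(labels, groups) if gg == g]
        # Candidate thresholds: every observed score, plus +inf (flag nothing).
        for t in sorted(set(s_g)) + [float("inf")]:
            if fpr_at(s_g, y_g, t) <= target_fpr:
                thresholds[g] = t
                break
    return thresholds
```

Group-specific thresholds require the protected attribute (here, gear type) to be available when decisions are made, which is a design choice to weigh separately from the fairness gain.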

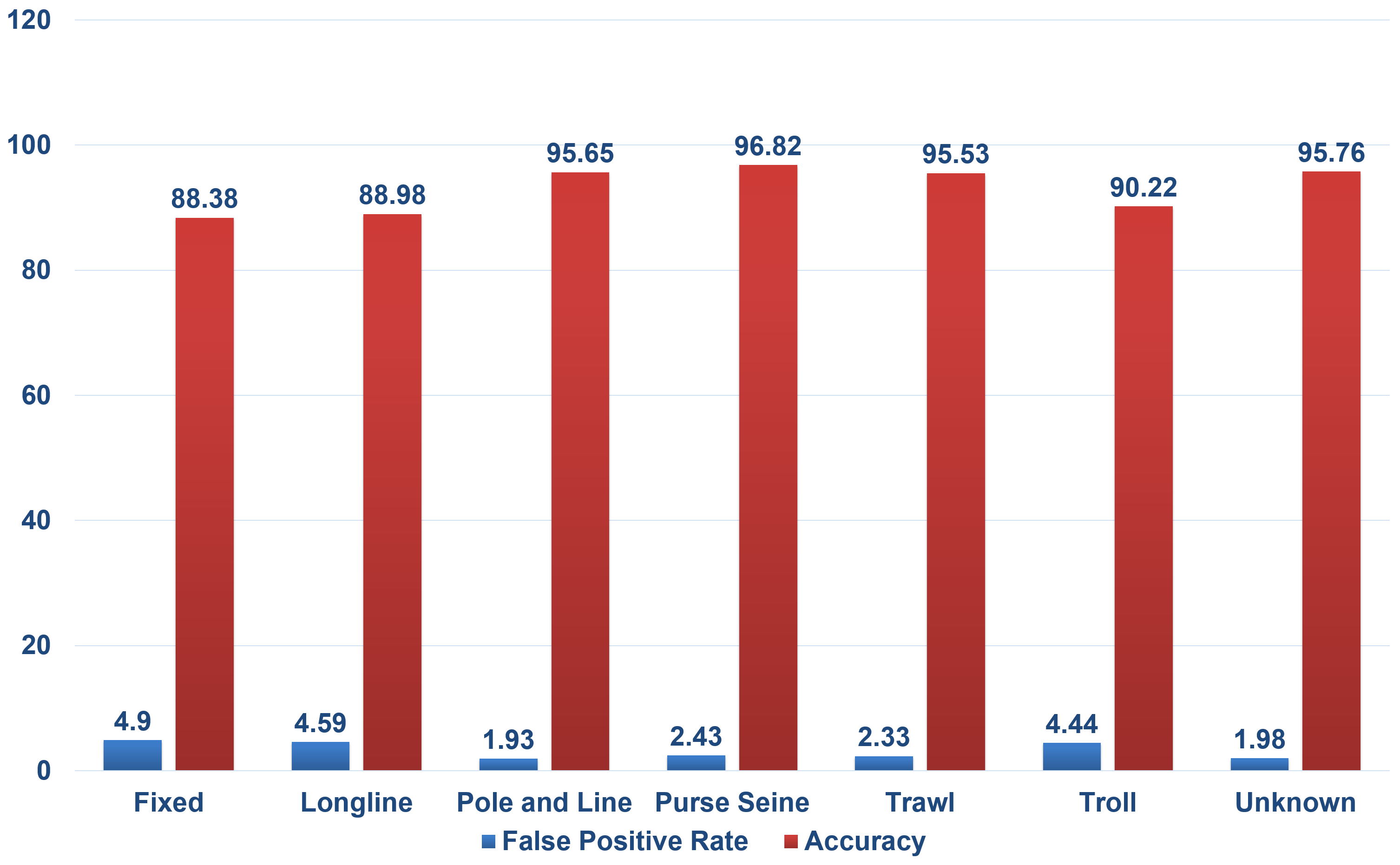

Based on these results, bias mitigation efforts were focused on the data cleaning and preparation stages of the ML pipeline, as these are the earliest points at which mitigation can be applied. Additional exploratory data analysis identified notable issues: disproportionate representation across gear types, and imbalance between positive and negative instances of fishing activity for some gear types. Adjusting the data cleaning process and applying techniques such as data balancing and data augmentation produced a new version of the training data, better suited to bias mitigation. The results of these modifications are shown in Figure 8. The FPR was greatly reduced across all gear types while fishing detection accuracy remained at an acceptable level. Notably, the FPR disparity fell from its original value of 52.62 to 2.97.
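The source names data balancing and data augmentation without detailing them. As one hedged illustration, the sketch below first counts training rows per (gear type, label) stratum, the kind of check that reveals the representation and class-imbalance issues described above, and then applies random oversampling so that every stratum matches the largest one. The row fields `gear_type` and `label` are hypothetical. Oversampling cannot create a stratum that is entirely missing (for example, a gear type with no negative instances); that is where augmentation or additional data collection would be needed.

```python
import random
from collections import Counter, defaultdict


def stratum_counts(rows):
    """Count training rows per (gear_type, label) stratum."""
    return Counter((r["gear_type"], r["label"]) for r in rows)


def oversample_strata(rows, seed=0):
    """Randomly duplicate rows so every observed stratum matches the largest one."""
    rng = random.Random(seed)  # fixed seed for reproducible training data
    by_stratum = defaultdict(list)
    for r in rows:
        by_stratum[(r["gear_type"], r["label"])].append(r)
    target = max(len(members) for members in by_stratum.values())
    balanced = []
    for members in by_stratum.values():
        balanced.extend(members)
        # Sample with replacement to fill the gap up to the target size.
        balanced.extend(rng.choice(members) for _ in range(target - len(members)))
    return balanced
```

Oversampling should be applied only to the training split, after the train/test split, so that duplicated rows do not leak into the data used to measure FPR disparity.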

Bias Assessment Next Steps

The results of this investigation demonstrate successful identification and mitigation of bias in an automated decision system. However, harms and protected attributes may be interpreted in diverse ways, and the bias and fairness of a system may drift over time. Continued monitoring is essential to identify potential unfairness, whether it arises from previously unidentified sources of bias or from drift in the system. A continuation of this investigation into a field study stage will address these tasks.
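Continued monitoring could take the form of recomputing per-group FPRs for each new window of labelled data and flagging windows whose disparity exceeds a chosen threshold. A minimal sketch, assuming the per-window FPRs (in percent) have already been computed; the window identifiers, rates and the threshold of 10 are illustrative assumptions, not values from the investigation.

```python
from typing import Dict, List, Tuple

Window = Tuple[str, Dict[str, float]]  # (window id, {group: FPR in percent})


def disparity(rates: Dict[str, float]) -> float:
    """Largest difference between per-group rates (max minus min)."""
    return max(rates.values()) - min(rates.values())


def monitor(windows: List[Window], threshold: float = 10.0) -> List[Tuple[str, float, bool]]:
    """Return (window id, disparity, flagged) for each monitoring window."""
    report = []
    for window_id, rates in windows:
        gap = disparity(rates)
        report.append((window_id, round(gap, 2), gap > threshold))
    return report


if __name__ == "__main__":
    history = [
        ("window-1", {"troll": 6.0, "trawl": 4.5}),
        ("window-2", {"troll": 19.0, "trawl": 5.0}),
    ]
    for row in monitor(history):
        print(row)
```

Here the second window would be flagged for review, since its disparity of 14.0 exceeds the assumed threshold.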

The Path Forward

Responsible AI is essential to mitigating AI risks, of which bias risks are a subset. As DFO moves towards adopting AI to support decision-making and improve service delivery, it must ensure that these decisions are not only bias-aware but also accurate, human-centric, explainable, and privacy-aware.

DFO is in the process of defining principles to guide the development of AI applications and solutions. Once these are defined, various tools will be considered and/or developed to operationalize them.

Bibliography

- [1] V. Fomins, "The Shift from Traditional Computing Systems to Artificial Intelligence and the Implications for Bias," Smart Technologies and Fundamental Rights, pp. 316–333, 2020.

- [2] A. Jobin, M. Ienca and E. Vayena, "The global landscape of AI ethics guidelines," Nature Machine Intelligence, pp. 389–399, 2019.

- [3] S. Sicular, E. Brethenoux, F. Buytendijk and J. Hare, "AI Ethics: Use 5 Common Guidelines as Your Starting Point," Gartner, 11 July 2019. [Online]. Available: https://www.gartner.com/en/documents/3947359/ai-ethics-use-5-common-guidelines-as-your-starting-point. [Accessed 23 August 2021].

- [4] S. Bird, M. Dudík, R. Edgar, B. Horn, R. Lutz, V. Milan, M. Sameki, H. Wallach and K. Walker, "Fairlearn: A toolkit for assessing and improving fairness in AI," Microsoft, May 2020. [Online]. Available: https://www.microsoft.com/en-us/research/publication/fairlearn-a-toolkit-for-assessing-and-improving-fairness-in-ai/. [Accessed 30 November 2021].

- [5] "Aequitas," Center for Data Science and Public Policy. [Online]. Available: http://www.datasciencepublicpolicy.org/our-work/tools-guides/aequitas/.

- [6] IBM Developer Staff, "AI Fairness 360," IBM, 14 November 2018. [Online]. Available: https://developer.ibm.com/open/projects/ai-fairness-360/. [Accessed 28 July 2021].