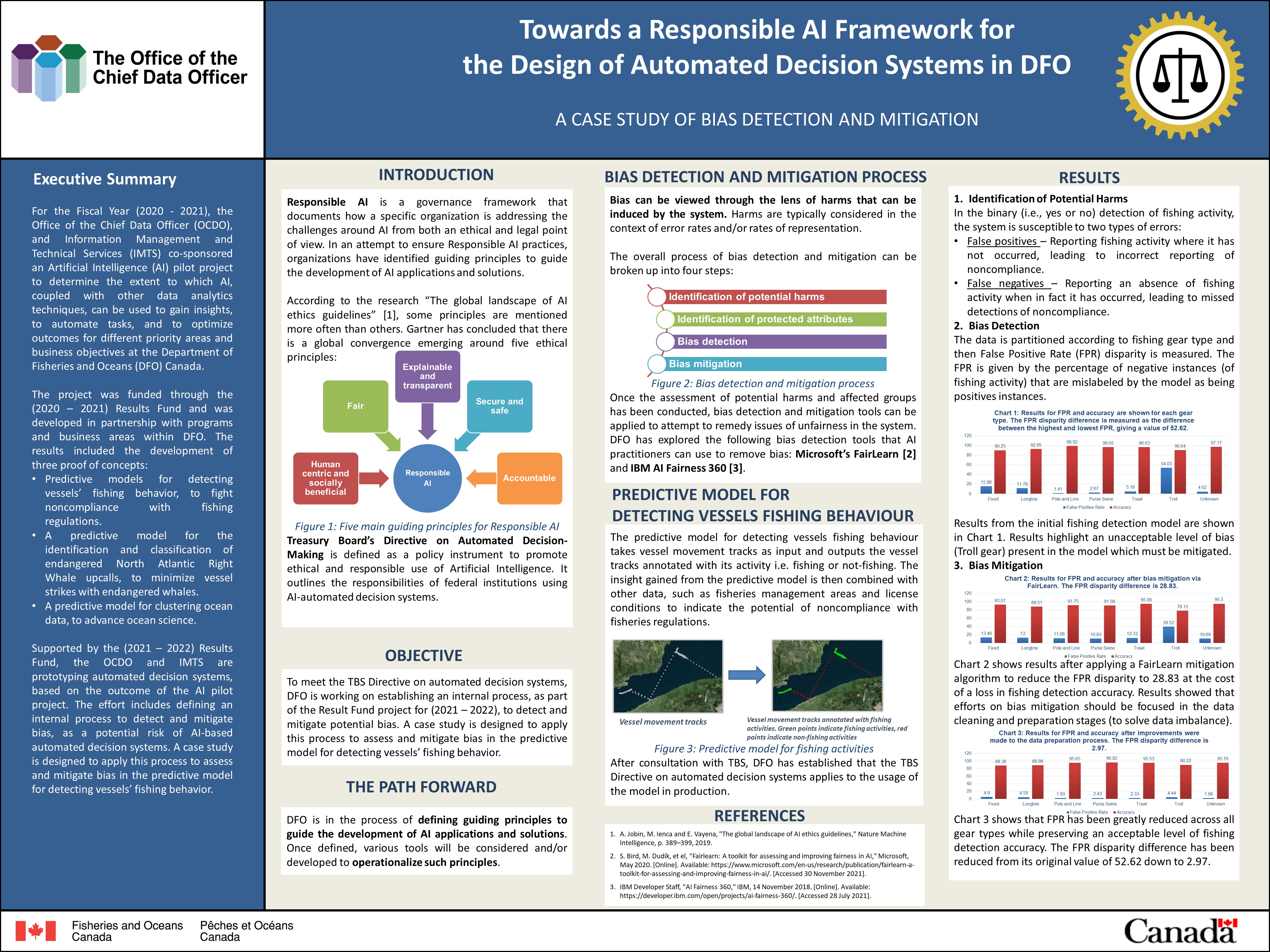

Towards a Responsible AI Framework for the Design of Automated Decision Systems in DFO: a Case Study of Bias Detection and Mitigation

Executive Summary

Automated Decision Systems (ADS) are computer systems that automate, or assist in automating, part or all of an administrative decision-making process. ADS driven by Machine Learning (ML) algorithms are currently being used by the Government of Canada to improve service delivery. Such systems bring both benefits and risks to federal institutions, including Fisheries and Oceans Canada (DFO). ADS therefore need to be deployed in a manner that reduces risks to Canadians and federal institutions and leads to more efficient, accurate, consistent, and interpretable decisions.

In fiscal year 2020-21, the Office of the Chief Data Officer (OCDO) and Information Management and Technical Services (IMTS) co-sponsored an Artificial Intelligence (AI) pilot project to determine the extent to which AI, coupled with other data analytics techniques, can be used to gain insights, automate tasks, and optimize outcomes for different priority areas and business objectives at DFO. The project was funded through the 2020-21 Results Fund and was developed in partnership with programs and business areas within DFO. The project produced three proofs of concept:

- Predictive models for detecting vessels’ fishing behavior, to combat non-compliance with fishing regulations.

- A predictive model for identifying and classifying the upcalls of the endangered North Atlantic Right Whale, to reduce vessel strikes on these whales.

- A predictive model for clustering ocean data, to advance ocean science.

Supported by the 2021-22 Results Fund, the OCDO and IMTS are prototyping automated decision systems based on the outcomes of the AI pilot project. This effort includes defining an internal process to detect and mitigate bias, a potential risk of ML-based automated decision systems. A case study has been designed to apply this process to assess and mitigate bias in the predictive model for detecting vessels’ fishing behavior.

In the long term, the goal is to develop a comprehensive responsible AI framework that ensures the responsible use of AI within DFO and compliance with the Treasury Board Directive on Automated Decision-Making.